This is a simple POC showing how to configure Kafka and integrate it with Pega.

Version: Pega PE 8.5.1.

Demo Video:

Follow the steps below and you should be able to do it yourself 🙂

Download and install the following:

- Download Server JRE according to your OS and CPU architecture from http://www.oracle.com/technetwork/java/javase/downloads/jre8-downloads-2133155.html

- Download and install 7-zip from http://www.7-zip.org/download.html

- Download and extract ZooKeeper using 7-zip from http://zookeeper.apache.org/releases.html

- Download and extract Kafka using 7-zip from http://kafka.apache.org/downloads.html

Here we use a standalone ZooKeeper installation rather than the one packaged with Kafka; in both cases it is a single-node ZooKeeper instance. If you prefer, you can run the ZooKeeper packaged with Kafka instead, using the scripts in the \kafka\bin\windows directory.

Installation

A. JDK Setup

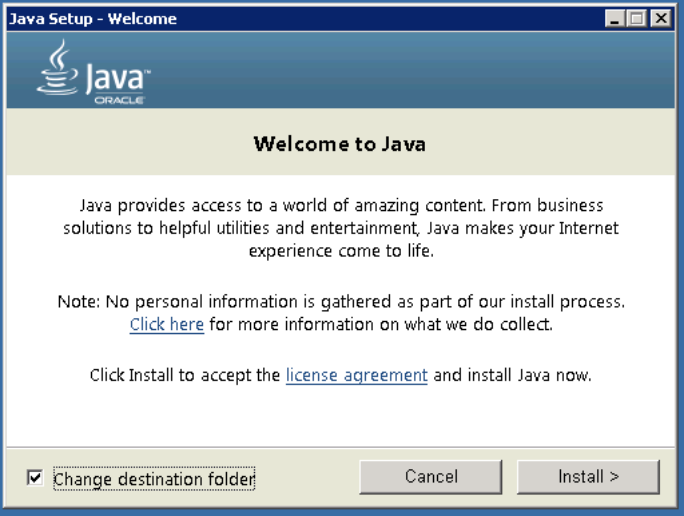

1. Start the JRE installation, select the “Change destination folder” checkbox, then click ‘Install.’

- Change the installation directory to any path without spaces in the folder name, e.g. C:\Java\jre1.8.0_xx\ (by default it is C:\Program Files\Java\jre1.8.0_xx), then click ‘Next.’

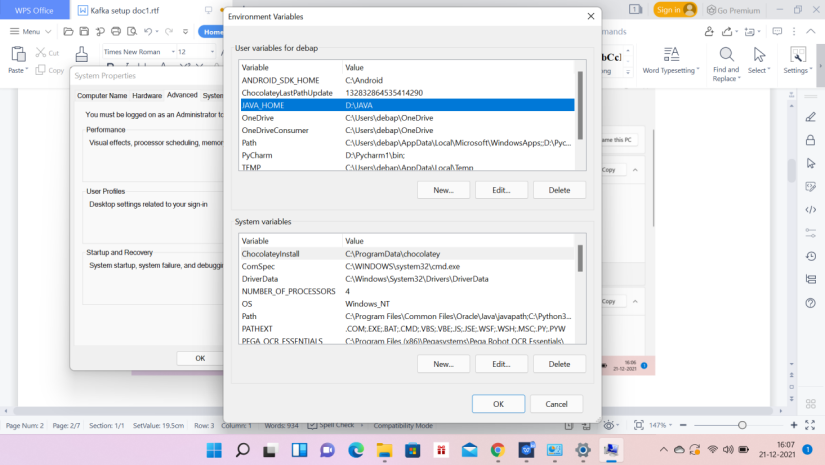

I have installed JDK in D:\JAVA

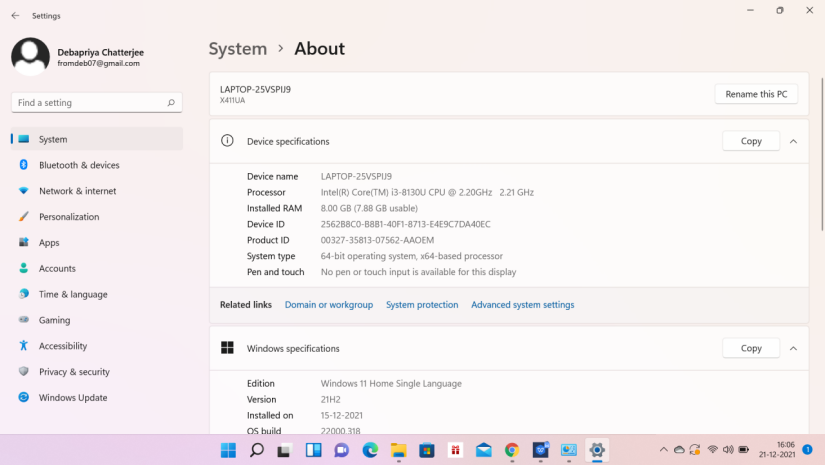

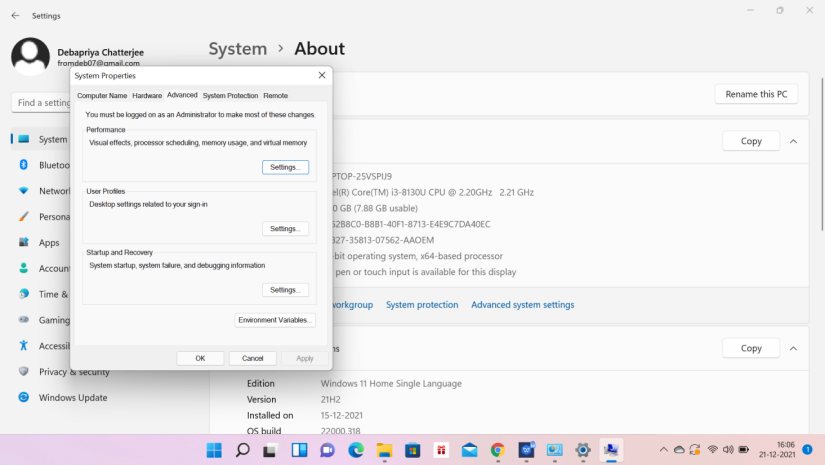

Now open the system environment variables dialogue by opening Control Panel -> System -> Advanced system settings -> Environment Variables.

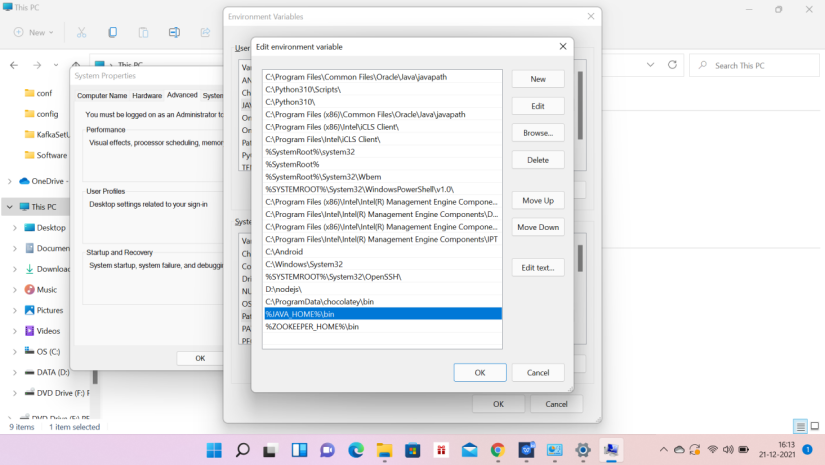

Click New in the User variables section, then enter JAVA_HOME as the Variable name and your JRE path as the Variable value. It should look like the image below:

Now click OK.

Find the Path variable in the System variables section of the Environment Variables dialog box you just opened.

Edit it and append “;%JAVA_HOME%\bin” to the end of the existing value (click Edit text to see the whole value), as in the image below:

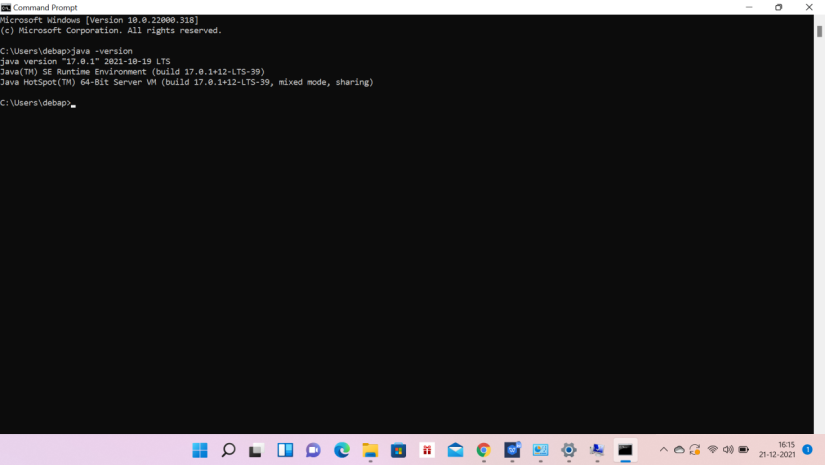
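If you prefer to see the Path edit as code, here is a minimal sketch (the function name `append_to_path` is hypothetical). It only computes the new semicolon-separated value and skips the entry if it is already present; it does not change any Windows setting, so you still paste the result into the Edit dialog.

```python
def append_to_path(path_value: str, entry: str) -> str:
    """Return path_value with entry appended, Windows-style (';' separated).

    Empty segments (from leading, trailing or doubled semicolons) are dropped,
    and the entry is not added twice. The comparison ignores case, as
    Windows paths do.
    """
    parts = [p for p in path_value.split(";") if p.strip()]
    if entry.lower() not in (p.lower() for p in parts):
        parts.append(entry)
    return ";".join(parts)


if __name__ == "__main__":
    current = r"C:\Windows\system32;C:\Windows;"
    print(append_to_path(current, r"%JAVA_HOME%\bin"))
```

The same call with `%ZOOKEEPER_HOME%\bin` covers the ZooKeeper Path entry later on.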

To confirm the Java installation, open cmd and type java -version. You should see the version of Java you just installed.

If your command prompt looks like the image above, you are good to go. Otherwise, check that the setup you installed matches your OS architecture (x86 or x64) and that the environment variable paths are correct.
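To run the same check from a script, here is a small sketch, assuming Python 3.7+ and `java` on the Path. `java -version` prints its banner to standard error; the hypothetical helper `java_major_version` extracts the major version from it, mapping the legacy `1.8.0_xx` form to 8.

```python
import re
import shutil
import subprocess


def java_major_version(version_output: str) -> int:
    """Return the major version from `java -version` output (1.8.0_xx -> 8)."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        raise ValueError("no version string found")
    numbers = [int(n) for n in re.findall(r"\d+", match.group(1))]
    # Java 8 and older use the legacy "1.x" scheme; Java 9+ start with the major.
    if numbers[0] == 1 and len(numbers) > 1:
        return numbers[1]
    return numbers[0]


if __name__ == "__main__":
    if shutil.which("java") is None:
        print("java is not on the Path: recheck JAVA_HOME and Path")
    else:
        # `java -version` writes its banner to standard error, not stdout.
        result = subprocess.run(["java", "-version"], capture_output=True, text=True)
        try:
            print("Java major version:", java_major_version(result.stderr))
        except ValueError:
            print("Unexpected output:", result.stderr)
```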

B. ZooKeeper Installation

1. Go to your ZooKeeper config directory. For me it's D:\KafkaSetUp\zookeeper1\conf

2. Rename the file “zoo_sample.cfg” to “zoo.cfg”.

3. Open zoo.cfg in any text editor, such as Notepad.

4. Find dataDir=/tmp/zookeeper and change it to dataDir=D:/KafkaSetUp/zookeeper1/data

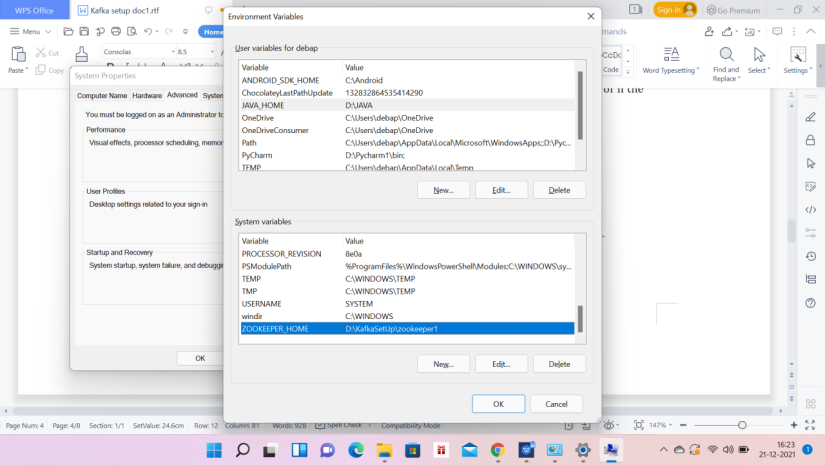

5. Add an entry in the System Environment Variables as we did for Java.

Add ZOOKEEPER_HOME = D:\KafkaSetUp\zookeeper1 to the System Variables.

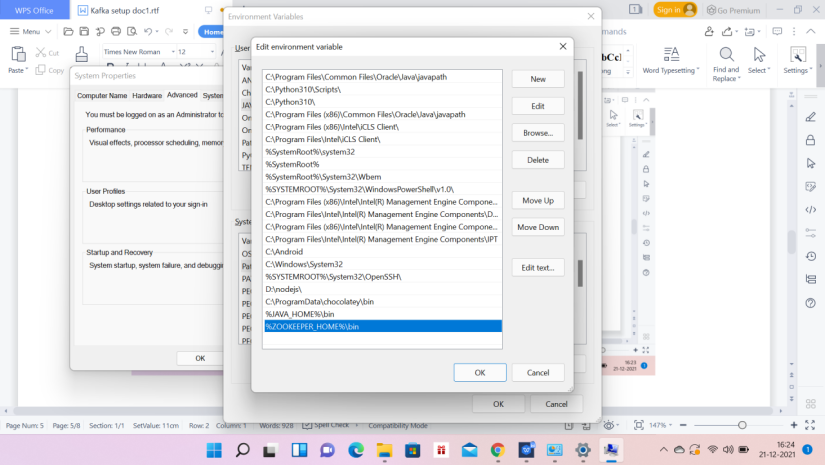

Edit the System Variable named “Path” and add ;%ZOOKEEPER_HOME%\bin;

- You can change the default ZooKeeper port with the clientPort setting in zoo.cfg (default 2181).

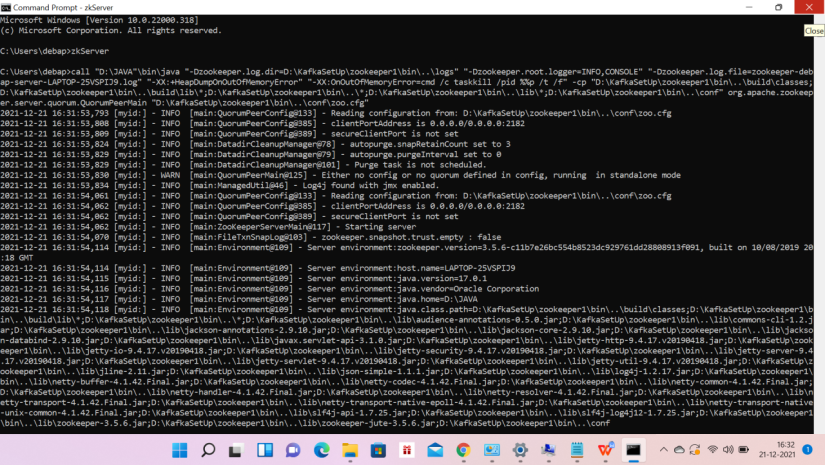
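The zoo.cfg edits in steps 2 and 4 can also be scripted. A minimal sketch, assuming the conf directory layout described above; `set_properties` and `configure_zookeeper` are hypothetical helpers that only handle simple `key=value` lines (no line continuations).

```python
from pathlib import Path


def set_properties(text: str, updates: dict) -> str:
    """Replace `key=value` lines in place and append keys that are missing.

    Comment lines (starting with '#') are left untouched.
    """
    remaining = dict(updates)
    out = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        if not line.lstrip().startswith("#") and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    out.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(out) + "\n"


def configure_zookeeper(conf_dir: str, data_dir: str, client_port: int = 2181) -> Path:
    """Rename zoo_sample.cfg to zoo.cfg (if needed) and set dataDir/clientPort."""
    conf = Path(conf_dir)
    cfg = conf / "zoo.cfg"
    if not cfg.exists():
        (conf / "zoo_sample.cfg").rename(cfg)
    updates = {
        # Forward slashes, as in the dataDir example above: a backslash is
        # an escape character in Java properties syntax.
        "dataDir": data_dir.replace("\\", "/"),
        "clientPort": str(client_port),
    }
    cfg.write_text(set_properties(cfg.read_text(), updates))
    return cfg
```

For this setup you would call `configure_zookeeper(r"D:\KafkaSetUp\zookeeper1\conf", r"D:\KafkaSetUp\zookeeper1\data")`, passing `client_port` only if you changed the default port.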

Run ZooKeeper by opening a new cmd window and typing zkServer.

You will see the command prompt with some details, like the image below:

Setting Up Kafka

1. Go to your Kafka config directory. For me it's D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\config

2. Edit the file “server.properties”.

3. Find the line log.dirs=/tmp/kafka-logs and change it to log.dirs=D:/KafkaSetUp/kafka/kafka_2.12-2.8.1/kafka-logs. Use forward slashes, as in zoo.cfg: Kafka reads this file as Java properties, where a backslash is an escape character.

4. If your ZooKeeper is running on another machine or cluster, change zookeeper.connect=localhost:2181 to your own host and port. For this demo everything runs on the same machine, so the host stays localhost; just make sure the port matches the clientPort in zoo.cfg. The Kafka port (the listeners setting) and broker.id are also configurable in this file. Leave the other settings as they are.

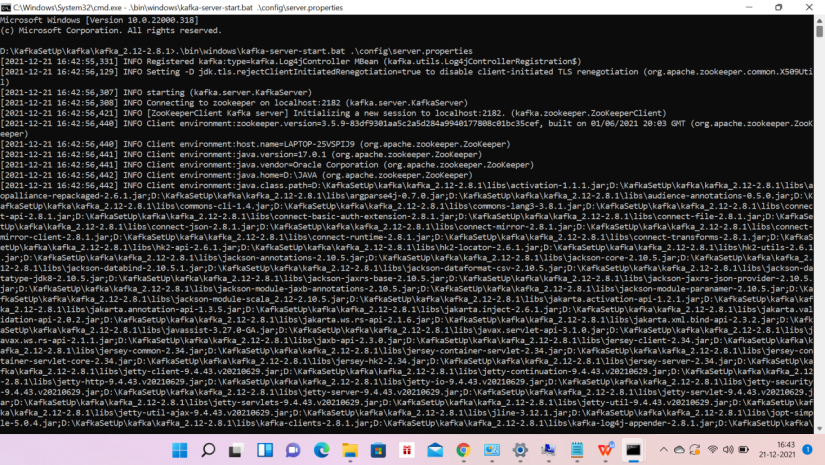
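Why the path separator matters in server.properties: Kafka loads the file with Java's `Properties` loader, where a backslash escapes the next character and is silently dropped when that character has no special meaning. The sketch below is a simplified emulation of that rule (not Kafka's own code; line continuations are ignored), showing what a backslash path turns into.

```python
def java_properties_unescape(raw: str) -> str:
    r"""Simplified emulation of how java.util.Properties decodes a value.

    \t, \n, \r, \f become control characters, \uXXXX becomes a Unicode
    character, and any other escaped character is kept while the
    backslash itself is dropped.
    """
    specials = {"t": "\t", "n": "\n", "r": "\r", "f": "\f"}
    out = []
    i = 0
    while i < len(raw):
        ch = raw[i]
        if ch == "\\" and i + 1 < len(raw):
            nxt = raw[i + 1]
            if nxt == "u" and i + 6 <= len(raw):
                out.append(chr(int(raw[i + 2:i + 6], 16)))
                i += 6
                continue
            out.append(specials.get(nxt, nxt))
            i += 2
            continue
        out.append(ch)
        i += 1
    return "".join(out)


if __name__ == "__main__":
    for value in (r"D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\kafka-logs",
                  "D:/KafkaSetUp/kafka/kafka_2.12-2.8.1/kafka-logs"):
        print(value, "->", java_properties_unescape(value))
```

Running it shows the backslash path collapsing to D:KafkaSetUpkafkakafka_2.12-2.8.1kafka-logs, while the forward-slash path comes through unchanged.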

Running a Kafka Server

Important: Please ensure that your ZooKeeper instance is up and running before starting a Kafka server.
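One way to check this before starting the broker is a plain TCP connection test. A minimal sketch; the ports in it are assumptions (2181 is ZooKeeper's default and 9092 Kafka's), so replace them with the clientPort from your zoo.cfg and your broker port. A successful connection only shows that something is listening on the port, not that it is ZooKeeper or Kafka.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Assumed default ports; adjust to your own zoo.cfg and server.properties.
    for name, port in (("ZooKeeper", 2181), ("Kafka", 9092)):
        state = "up" if is_listening("localhost", port) else "NOT reachable"
        print(f"{name} on localhost:{port}: {state}")
```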

1. Go to your Kafka installation directory: D:\KafkaSetUp\kafka\kafka_2.12-2.8.1

2. Open a command prompt in that directory by typing cmd in the File Explorer address bar and pressing Enter.

3. Now type .\bin\windows\kafka-server-start.bat .\config\server.properties and press Enter.

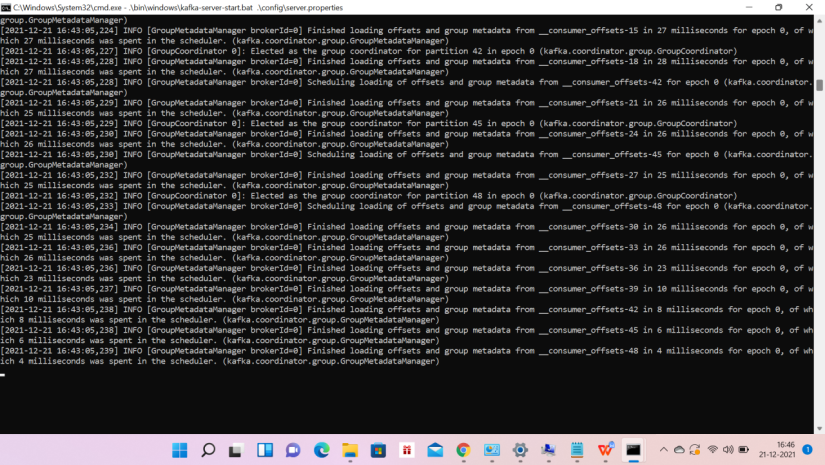

If everything went fine, your command prompt will look like this:

The cursor should keep blinking at the bottom of the window. If the prompt returns to the directory instead, the server has stopped and there is an issue. If the log says that log directories have failed, go to D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\kafka-logs, delete all the log files (Shift+Delete), and try to start the Kafka server again.
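If you clean the log directory often, a sketch like the following does the same as deleting the files by hand. `clear_directory` is a hypothetical helper: it empties the directory but keeps the directory itself. Only run it while the Kafka server is stopped, because it permanently deletes everything the broker stored there.

```python
import shutil
from pathlib import Path


def clear_directory(path: str) -> int:
    """Delete everything inside `path` but keep the directory itself.

    Returns the number of top-level entries removed.
    """
    removed = 0
    # Materialize the listing first so we never delete while iterating.
    for entry in list(Path(path).iterdir()):
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)  # partition folders such as testtopic3-0
        else:
            entry.unlink()  # loose files such as checkpoint files
        removed += 1
    return removed
```

For this setup the call would be `clear_directory(r"D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\kafka-logs")`.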

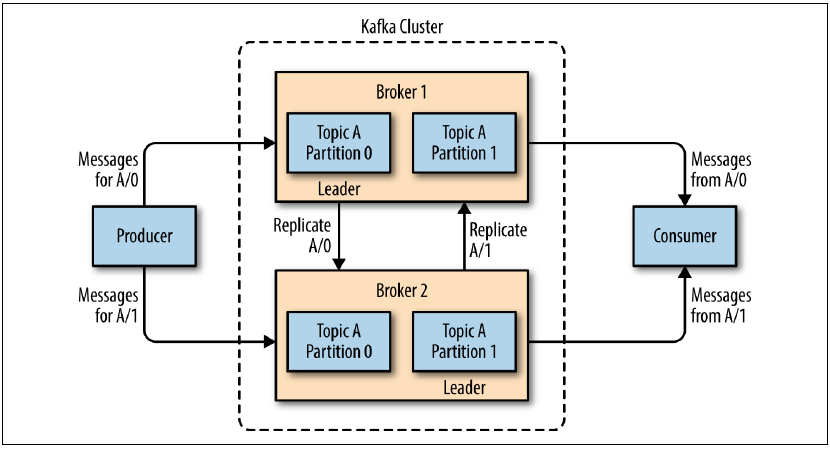

Now that your Kafka server is up and running, you can create topics to store messages. You can also produce or consume data from Java or Scala code, or directly from the command prompt.

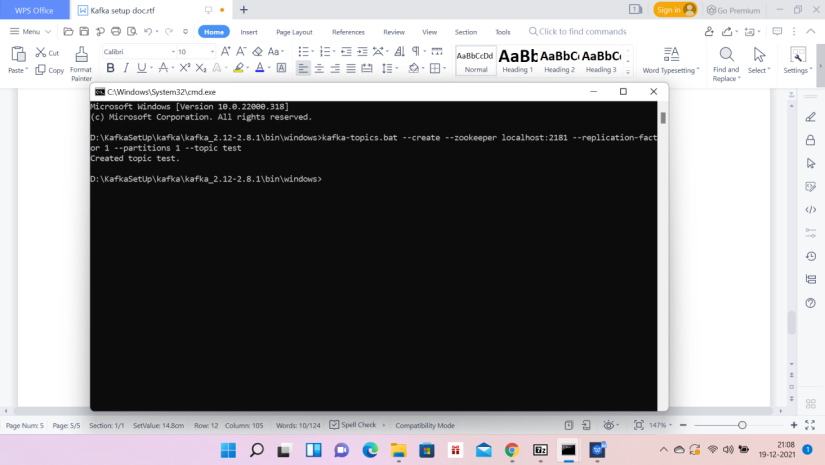

Creating Topics

1. Now create a topic (the command below names it testtopic3) with a replication factor of 1, as we have only one Kafka server running. If you have a cluster with more than one Kafka server, you can increase the replication factor accordingly (it cannot exceed the number of brokers), which improves data availability and fault tolerance.

2. Open a new command prompt in the location D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\bin\windows.

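3. Type the following command and press Enter. In my setup ZooKeeper listens on port 2182; use the clientPort from your zoo.cfg (2181 by default):

kafka-topics.bat --create --zookeeper localhost:2182 --replication-factor 1 --partitions 1 --topic testtopic3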

(The screenshot below is for the topic test.)

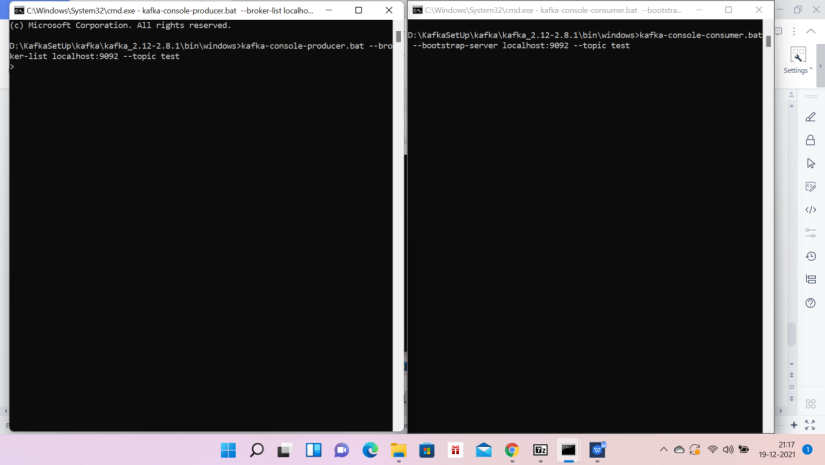
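Because this walkthrough creates several topics (testtopic3 and, later, testtopic4), it can help to check a name before creating it. Kafka accepts topic names made of ASCII letters, digits, `.`, `_` and `-`, up to 249 characters, excluding `.` and `..`. The sketch below encodes those rules and builds the argument list for the command above with plain ASCII double hyphens; the function names are hypothetical.

```python
import re

# Kafka topic-name rules: ASCII letters, digits, '.', '_' and '-',
# at most 249 characters, and not "." or "..".
_LEGAL_TOPIC = re.compile(r"[a-zA-Z0-9._-]+")
MAX_TOPIC_LENGTH = 249


def is_valid_topic(name: str) -> bool:
    return (
        0 < len(name) <= MAX_TOPIC_LENGTH
        and name not in (".", "..")
        and _LEGAL_TOPIC.fullmatch(name) is not None
    )


def create_topic_args(topic: str, zookeeper: str, partitions: int = 1,
                      replication_factor: int = 1) -> list:
    """Build the kafka-topics.bat argument list for creating a topic."""
    if not is_valid_topic(topic):
        raise ValueError(f"illegal Kafka topic name: {topic!r}")
    return [
        "kafka-topics.bat", "--create",
        "--zookeeper", zookeeper,
        "--replication-factor", str(replication_factor),
        "--partitions", str(partitions),
        "--topic", topic,
    ]
```

The resulting list could be passed to `subprocess.run(args, check=True)` from the bin\windows directory.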

Creating a Producer and Consumer to Test Server

1. Open a new command prompt in the location D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\bin\windows

2. To start a producer, type the following command (9093 is the Kafka broker port in my setup; the default is 9092):

kafka-console-producer.bat --broker-list localhost:9093 --topic testtopic3

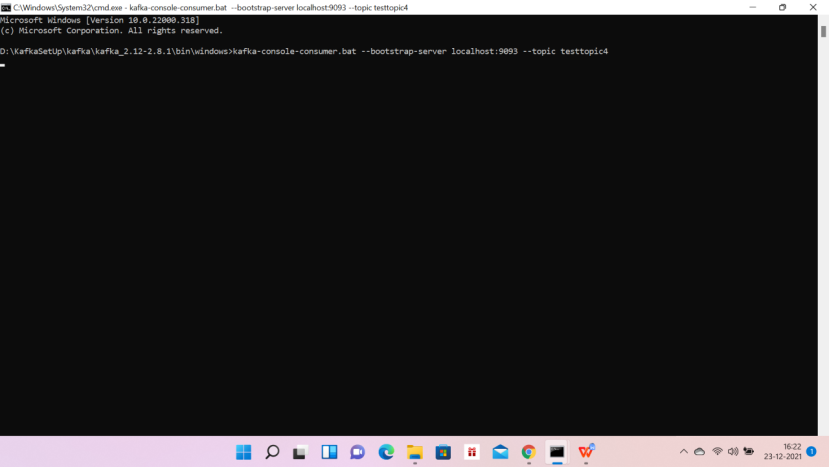

3. Open another command prompt in the same location, D:\KafkaSetUp\kafka\kafka_2.12-2.8.1\bin\windows

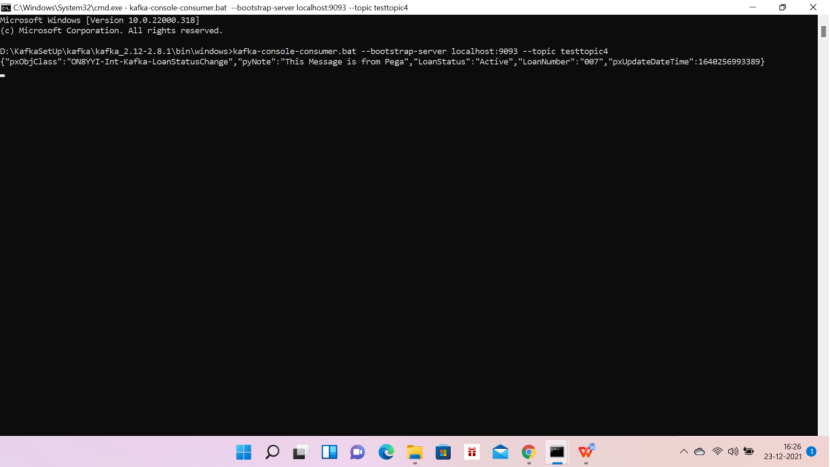

4. Now start a consumer by typing the following command:

Before Kafka version 2.0 (< 2.0):

kafka-console-consumer.bat --zookeeper localhost:2181 --topic test

From Kafka version 2.0 onward (>= 2.0):

kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic test

For me, with the broker on port 9093, this worked:

kafka-console-consumer.bat --bootstrap-server localhost:9093 --topic testtopic3

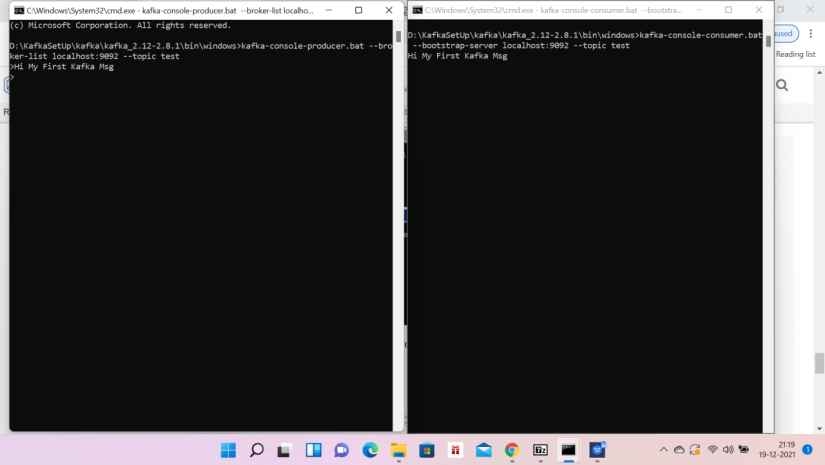
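The version rule from step 4 can be written as a small function. A sketch only: `console_consumer_args` is hypothetical, expects a version string such as "2.8.1", and defaults to the standard ports. `--from-beginning` is an optional console-consumer flag that also prints messages sent before the consumer started.

```python
def console_consumer_args(kafka_version: str, topic: str,
                          zookeeper: str = "localhost:2181",
                          bootstrap_server: str = "localhost:9092",
                          from_beginning: bool = False) -> list:
    """Pick the connection flag that matches the Kafka version."""
    major, minor = (int(p) for p in kafka_version.split(".")[:2])
    args = ["kafka-console-consumer.bat"]
    if (major, minor) < (2, 0):
        # Before 2.0 the console consumer could connect through ZooKeeper.
        args += ["--zookeeper", zookeeper]
    else:
        # From 2.0 on it connects to a broker directly.
        args += ["--bootstrap-server", bootstrap_server]
    args += ["--topic", topic]
    if from_beginning:
        args.append("--from-beginning")
    return args
```

For the setup above, `console_consumer_args("2.8.1", "testtopic3", bootstrap_server="localhost:9093")` yields the command that worked.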

- Now you will have two command prompts, like the image below:

(The screenshots are for the topic test.)

Now type anything in the producer command prompt (the left-hand window) and press Enter. The same message should appear in the consumer command prompt (the right-hand window).

If you are able to push and see your messages on the consumer side, you are done with Kafka setup.

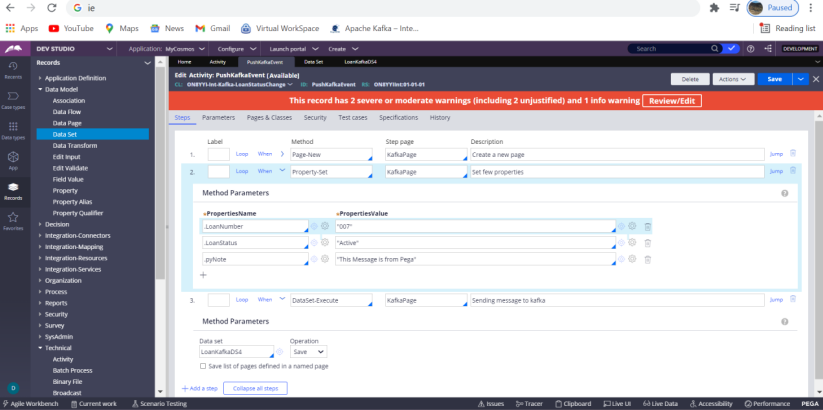

Pega Configuration:

Start your Pega Personal Edition 8.5.1

Create the class ONBYYI-Int-Kafka-LoanSatusChange in the ruleset ONBYYIInt and add two properties to it:

- LoanNumber

- LoanStatus

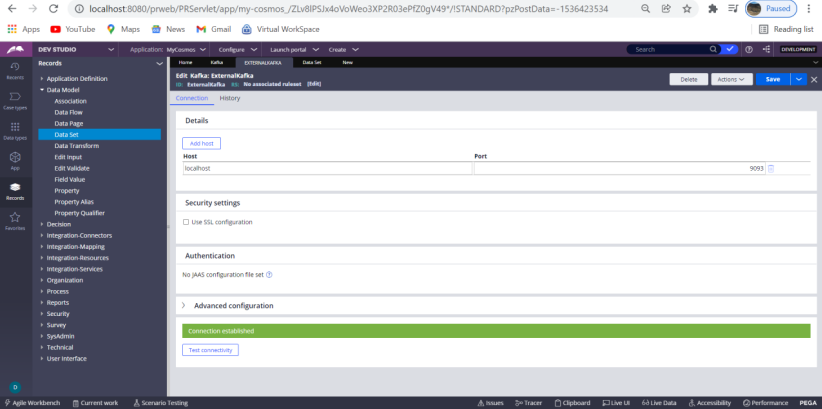

Create a Kafka rule: go to SysAdmin > Kafka and enter:

- ID: ExternalKafka

- Host: localhost

- Port: 9093

Then test the connection.

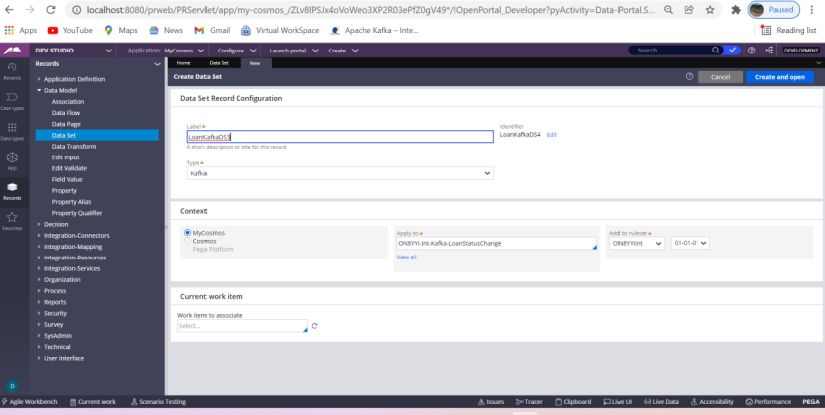

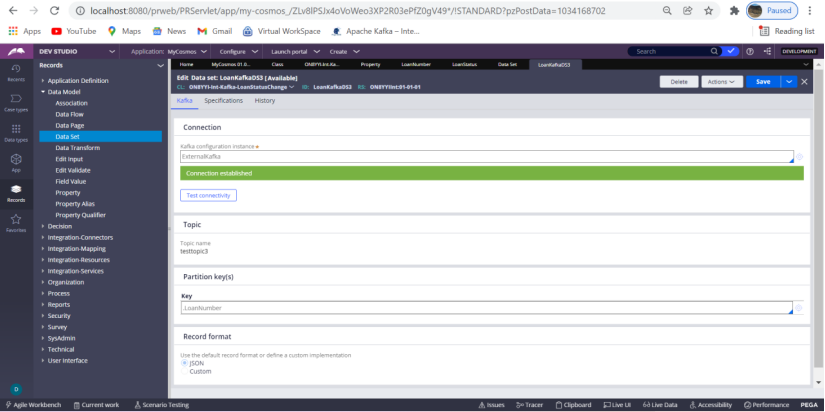

- Create a Data Set named LoanKafkaDS3 that uses the topic testtopic3.

While you create the Data Set, the topic names appear in the drop-down list, which means your connection is working. Choose the topic from the drop-down list.

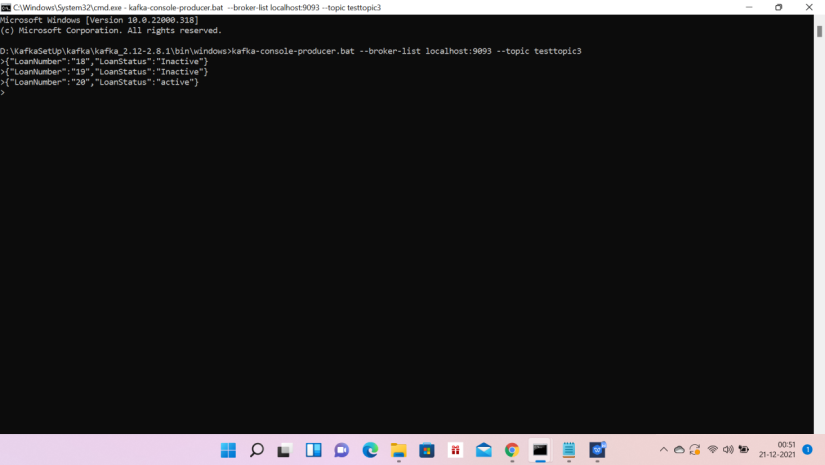

Start the producer on testtopic3 and send the following messages:

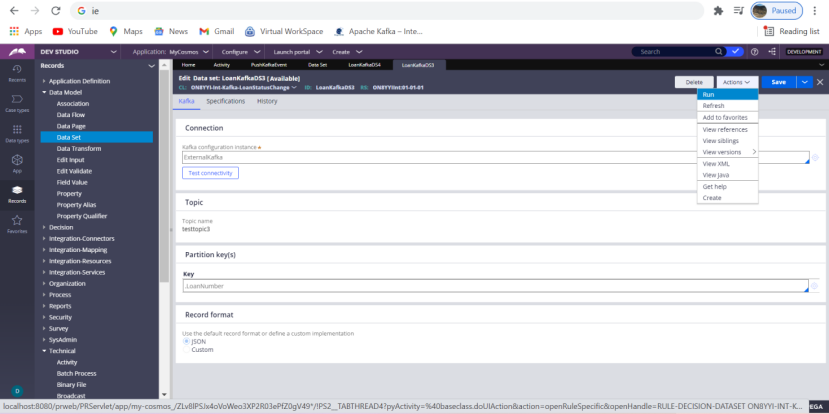

Do not open a consumer window; go to Pega and run the Data Set LoanKafkaDS3.

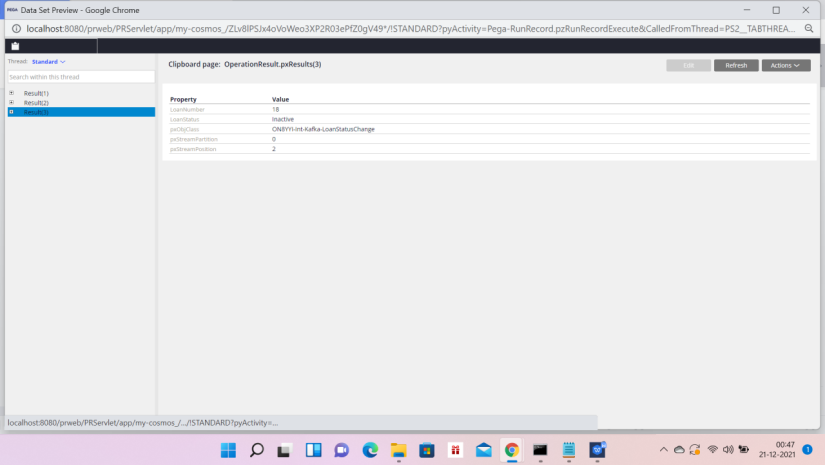

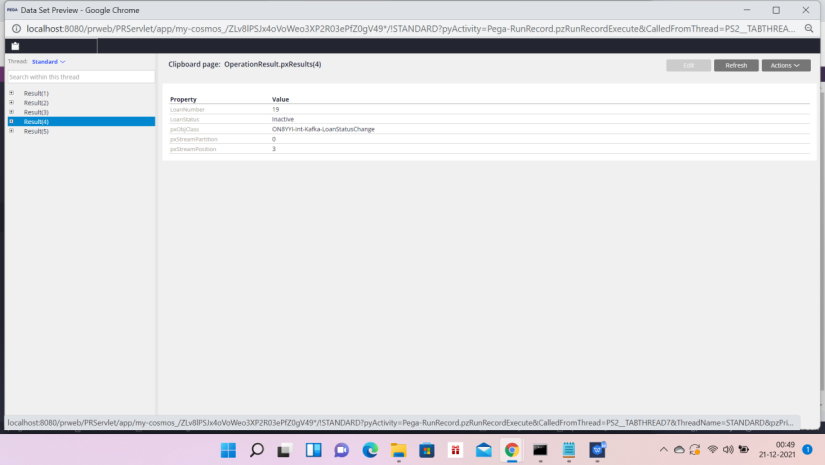

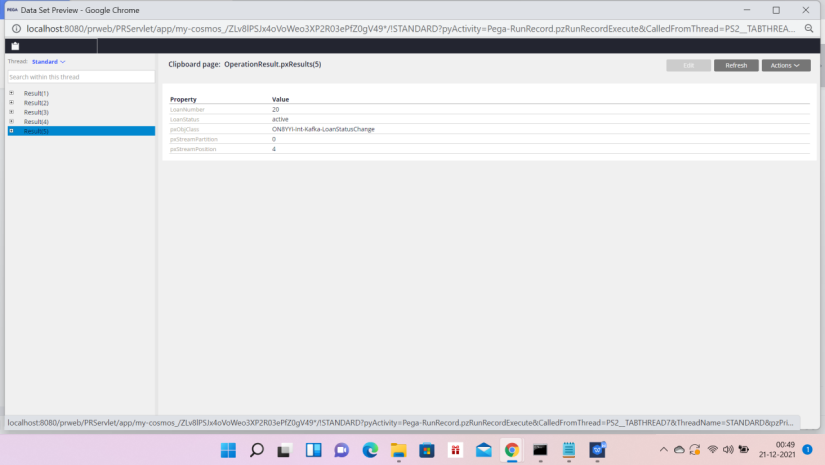

Run the Data Set LoanKafkaDS3 and check OperationResult on the Clipboard.

Check that the data matches what you sent from the producer. If the Data Set does not run and shows the error ‘please contact administrator’, close the producer, create a new topic, start the producer on the new topic, create a new Data Set for that topic, and run the Data Set again. The same applies to any wrong data you send from the producer: you may get an error, and the Data Set may keep failing after that. In that case, create a new topic and a new Data Set for it.

So the data came successfully from Kafka (producer) to Pega (consumer).

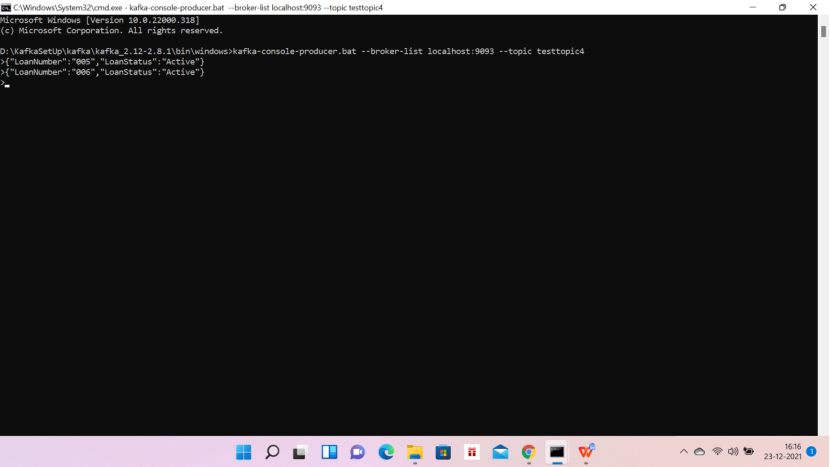

Another Scenario: Using testtopic4 and LoanKafkaDS4

The producer sends this message:

{"LoanNumber":"006", "LoanStatus":"Active"}
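Curly quotes, like the ones word processors and blog editors insert, are not valid JSON, so a message typed with them is the kind of wrong data that can make the Data Set fail. A minimal sketch, assuming the Data Set maps top-level JSON keys to the class properties LoanNumber and LoanStatus: it builds a message with `json.dumps` and checks a line before you paste it into the producer. The function names are hypothetical.

```python
import json

# Property names of the loan class used in this article (assumed required).
REQUIRED_KEYS = ("LoanNumber", "LoanStatus")


def loan_message(loan_number: str, loan_status: str) -> str:
    """Serialize one loan record as a single-line JSON message."""
    return json.dumps({"LoanNumber": loan_number, "LoanStatus": loan_status})


def check_message(line: str) -> list:
    """Return a list of problems with a producer line (empty if it looks OK)."""
    try:
        data = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]
    return [f"missing key: {k}" for k in REQUIRED_KEYS if k not in data]


if __name__ == "__main__":
    print(loan_message("006", "Active"))
    print(check_message("{“LoanNumber”:”006”, “LoanStatus”:”Active”}"))
```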

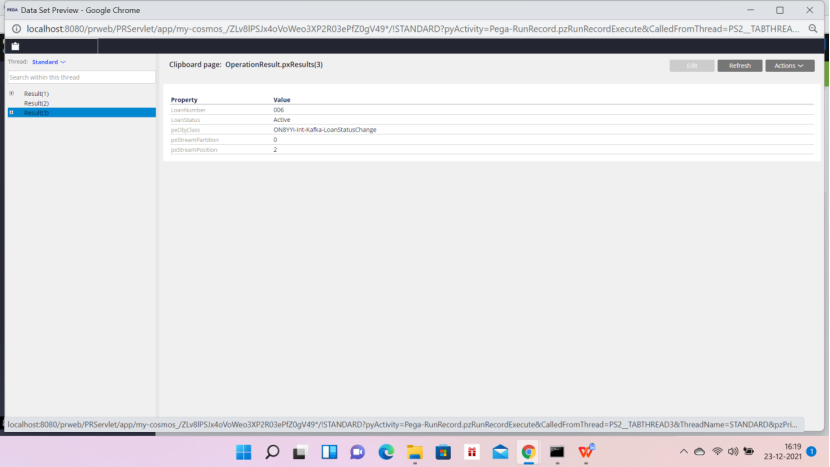

Go to Pega and run the LoanKafkaDS4 Data Set.

The record with LoanNumber 006 is now in Pega.

So the data came successfully from Kafka (producer) to Pega (consumer).

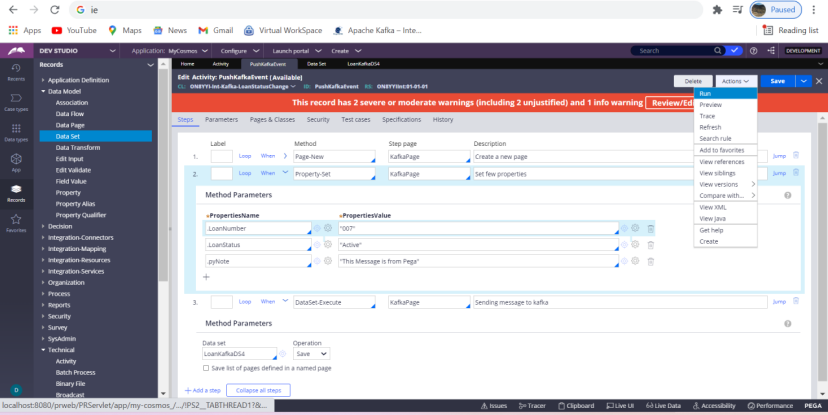

Now push a message from Pega (producer) to Kafka (consumer) by running the Data Set from a Pega activity.

Start a consumer on testtopic4:

kafka-console-consumer.bat --bootstrap-server localhost:9093 --topic testtopic4

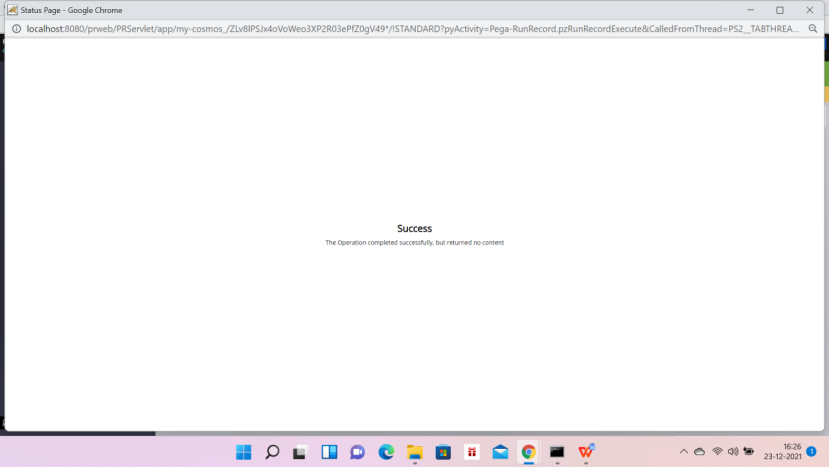

Now run the Pega activity

Check the data, LoanNumber:007 has been successfully posted to Kafka from Pega.

Congratulations!!! You have successfully configured Kafka and integrated it with Pega PE.

Pat on your back 😉

Demo Video: Kafka (Producer) to Pega (Consumer)

Demo Video: Pega (Producer) to Kafka (Consumer)
